TfT Performance: Methodology

This is the first article in a series looking at the performance of current applications for Personal Knowledge Management - so-called "Tools for Thought."

Introduction

If you want to get realistic results from performance tests, you must create natural conditions. If you're going to compare different applications, you have to use the same data. I've chosen synthetic test data over a copy of one of my personal knowledge graphs to ensure this.

A lightweight Python script that I developed generates the synthetic test data.

The program flow is straightforward and shown below; start reading in the upper-left.

To create realistic-looking pages, we need:

A dictionary with English words and an algorithm to select them

Some probabilistic functions which we use to create a certain amount of fuzziness in the length of paragraphs and sentences

An algorithm to generate page links to other documents

Creating Words

I use a dictionary from Wikiquote containing the 10.000 most-common words in Project Gutenberg. The good thing is that this dictionary already supplies the frequency of the words in the different books and texts, providing a perfect basis for the word generation algorithm.

As you can see, the frequency has already dropped significantly for the first ten words.

Across all 10.000 words, the frequency drops inverse exponential.

Across all 10.000 words, the frequency drops inverse exponential.

We do this based on the frequency shown above when combining selected words into sentences. Therefore we make sure that we much more likely put a "and" or "that" into a sentence than a "purified" or "canton".

We don't want to be every sentence to have the same length. Therefore we need a probabilistic function to create some randomness.

Gaussian Distribution

I've chosen a gaussian distribution with a mean of seven and a standard deviation of 1. As you can see in the graph, most of the numbers will be between 4 and 10, with seven as the most frequent value.

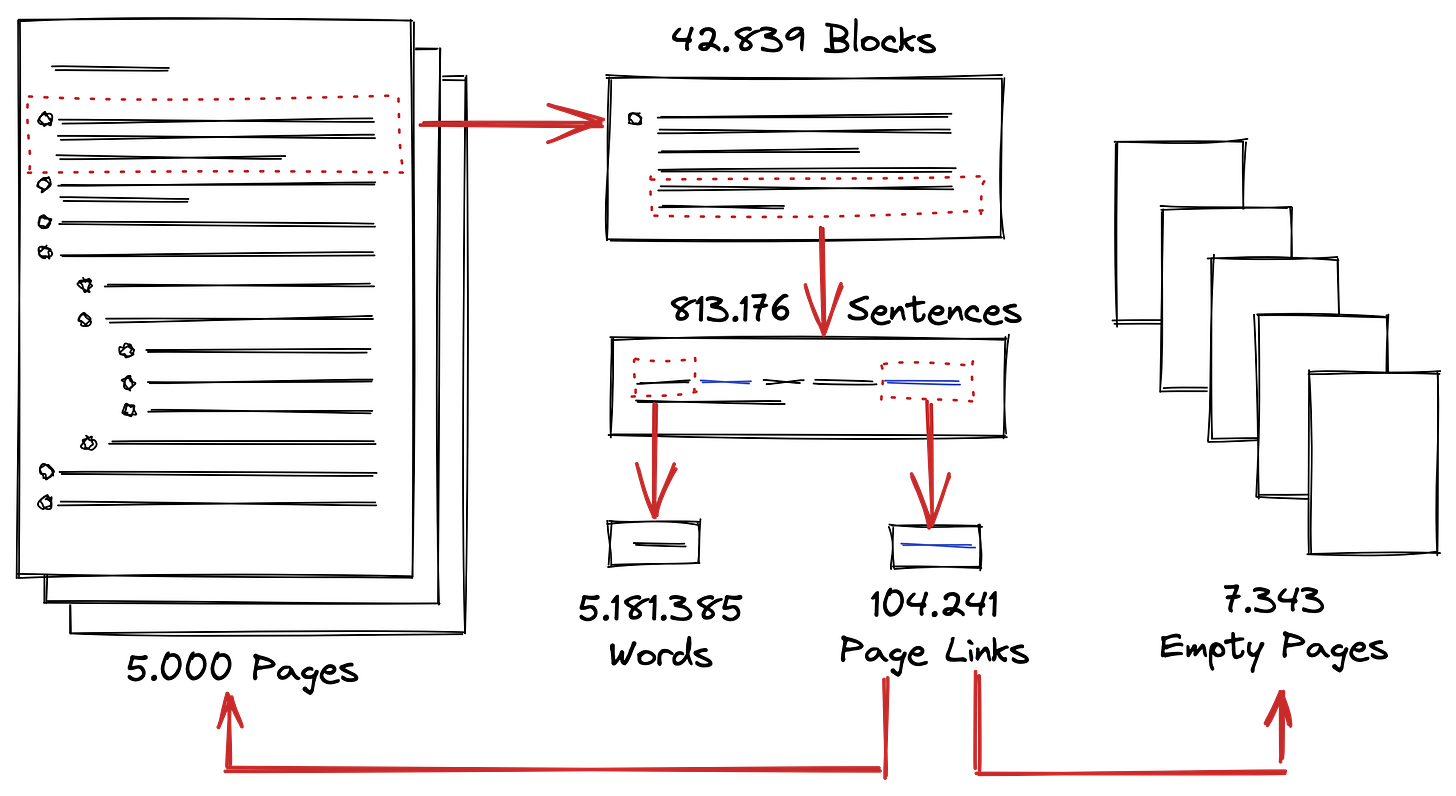

So each time we create a sentence, we randomize the number of words. Each time we make a block, we randomize the number of sentences (and sometimes ident some of them). And each time we create a page, we randomize the number of blocks. We created 5.000 such pages.

Creating Links

I've chosen a uniform distribution for creating links. The chance of making one while generating the sentences is 1:50, equally distributed between a two- and a one-word link.

I didn't want links on the most common words or too many different ones. When looking at my graphs, it shows that there are always a few terms that gather many backlinks on themselves.

So I used the frequency distribution above but moved the words by 1.500 places. So the most frequent word won't be "the" anymore but "dressed," followed by "lifted" and "hopes."

Combining it all together

When we combine all of this, we get a decent set of test data. Either as markdown files or as a Roam Research compatible JSON File.

This is how the generated pages look in Roam Research and in Logseq.

Downloads

You can download the files here if you want to experiment with my test data.

We'll look at the competitors and the task course in the next post.

If you have any questions or suggestions, please leave a comment.

If you want to support my work, you can do this by becoming a paid member:

Or you can buy me a coffee. Thanks in advance.