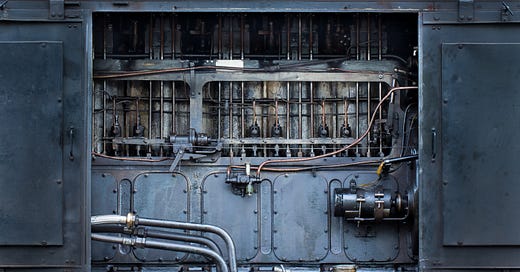

TfT Performance: Machine Room

In the meantime, down in the engine room, I have been thinking about the benchmark procedure and making the necessary preparations.

Hej, and welcome to this short interlude, where I talk about the sequence of benchmark tasks, the test data, the test environment, and some pitfalls with formats and import limits, before diving into the first results.

Preface

I've been thinking a lot about how best to evaluate the performance of the tools. In everyday use, you rarely run up against limits. Whether an application starts in 0.3, 0.6, or 1.1 seconds, or a search takes one or two blinks of an eye, may be annoying, but it doesn't get us anywhere (and is hard to measure reliably).
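Part of why such small differences are hard to measure is run-to-run noise: caches, background processes, and disk state all shift a single measurement. A common mitigation is to discard a warm-up run, repeat the action several times, and report the median together with the spread. The sketch below illustrates that idea; `time_repeated` is a hypothetical helper, not part of any benchmarking tool, and the action being timed is left to the caller.

```python
import statistics
import time
from typing import Callable


def time_repeated(action: Callable[[], None], runs: int = 10, warmup: int = 1) -> dict:
    """Hypothetical helper: run `action` repeatedly and summarize wall-clock seconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Warm-up runs absorb one-off effects such as cold caches; they are not recorded.
    for _ in range(warmup):
        action()
    durations = []
    for _ in range(runs):
        start = time.perf_counter()  # monotonic, high-resolution clock
        action()
        durations.append(time.perf_counter() - start)
    return {
        "median": statistics.median(durations),  # robust against single outliers
        "min": min(durations),
        "max": max(durations),
        "stdev": statistics.stdev(durations) if runs > 1 else 0.0,
    }


# Example: time a 10 ms sleep five times as a stand-in for a real action.
stats = time_repeated(lambda: time.sleep(0.01), runs=5)
print(stats)
```

The median is used instead of the mean because one slow outlier (say, a background indexer kicking in) would otherwise skew the result.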

I was more concerned with how the applications behave after we have been actively collecting and linking information for many years.

My current everyday database has around 3,000 pages with roughly 500,000 words and 15,000 interconnections, which I would consider medium-sized. So I thought I'd raise the bar a bit.
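Raising the bar in a controlled way means scaling exactly these three figures: pages, words, and links. The following is a minimal sketch of such a generator, not the one behind the test sets described here. It assumes Markdown pages that link to each other with `[[wikilink]]` syntax, uses filler vocabulary, and the function name `generate_vault` is hypothetical. A fixed random seed keeps the data identical across tools.

```python
import random


def generate_vault(pages: int, words: int, links: int, seed: int = 42) -> dict[str, str]:
    """Hypothetical generator: page title -> Markdown body with [[wikilinks]].

    `words` counts filler words only; the link tokens are added on top of it.
    """
    if pages < 2:
        raise ValueError("need at least two pages to link between them")
    rng = random.Random(seed)  # fixed seed: every tool gets identical data
    vocabulary = ["lorem", "ipsum", "dolor", "sit", "amet", "consectetur", "adipiscing", "elit"]
    titles = [f"Page {i:05d}" for i in range(pages)]

    # Spread the word budget evenly; the first `words % pages` pages get one extra word.
    base, extra = divmod(words, pages)
    bodies = [
        [rng.choice(vocabulary) for _ in range(base + (1 if i < extra else 0))]
        for i in range(pages)
    ]

    # Add `links` wikilinks between two distinct random pages (no self-links;
    # the same pair may be linked more than once).
    for _ in range(links):
        source, target = rng.sample(range(pages), 2)
        bodies[source].append(f"[[{titles[target]}]]")

    return {title: " ".join(body) for title, body in zip(titles, bodies)}


# Example: a vault shaped like the everyday database described above.
vault = generate_vault(pages=3000, words=500_000, links=15_000)
print(len(vault))
```

Scaling up is then a matter of changing the three arguments; on average, the example above yields about 167 words and 5 outgoing links per page (500,000 / 3,000 and 15,000 / 3,000).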

Test Data

In the last article, I already provided some background on the generated test data. The biggest of my current sets contains 10,000 pages and 9,330,186 …


Subscribe to Gödel's to keep reading this post and get 7 days of free access to the full post archives.